From Static Logic to Smart Generation

For decades, configurators have powered CPQ systems in manufacturing, machinery, and retail.

They help customers and engineers build complex solutions visually and interactively.

But here’s the problem: today’s configurators are static.

They rely on hard-coded rules, rigid schemas, and legacy logic trees that can’t adapt to nuance.

The next generation is AI-driven — capable of understanding requests, suggesting configurations, or even generating entire product setups autonomously.

That’s what a client in the industrial sector asked me to explore:

“How far can we realistically take AI in a 3D configurator without losing control or reliability?”

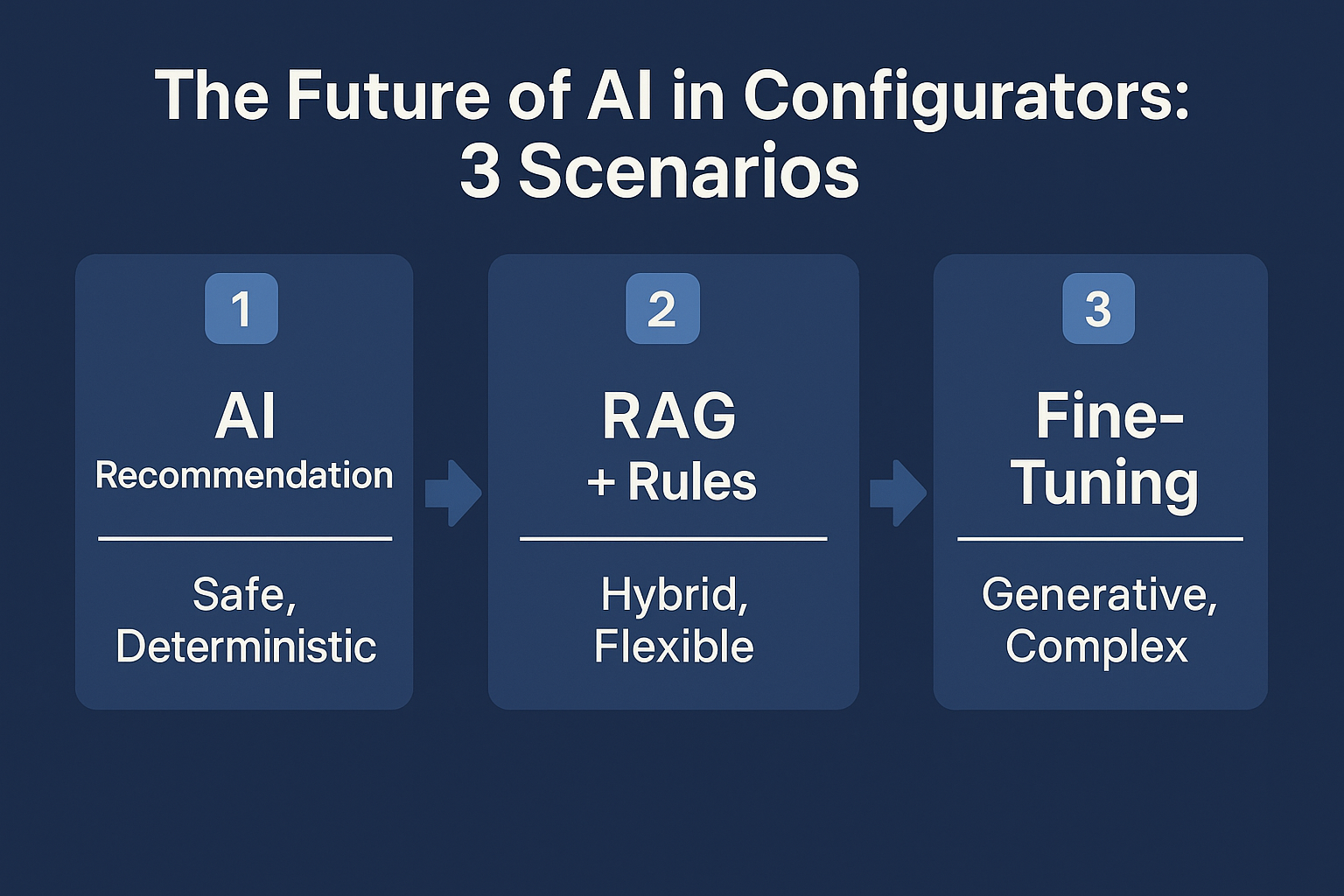

The result: three experimental setups that represent three stages of AI maturity — from a safe, deterministic recommender to a fully generative fine-tuned model.

Scenario 1 – The AI Recommender: Safe, Predictable, and Fast to Implement

This first scenario used AI as a recommendation layer on top of an existing configurator.

How It Works:

- Understanding the user’s request – The AI parses the text input and extracts all key parameters (dimensions, use case, constraints, etc.).

- Normalization – The parameters are converted into a standard format, so they can be compared against the product database.

- Database match – The system retrieves the top 3 pre-existing configurations.

- AI ranking – A lightweight model ranks those options based on semantic relevance and fit.

- Optional patching – If small adjustments are needed, the AI applies minor edits to the selected configuration.
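The five steps above can be sketched in a few lines of Python. This is a minimal illustration, not the client implementation: the catalog, the `Config` fields, the 10% patch tolerance, and the distance-based ranking are all assumptions made for the example, and a regex stands in for the language model so the sketch runs offline.

```python
import re
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Config:
    name: str
    width_mm: int
    height_mm: int
    use_case: str


# Hypothetical catalog standing in for the product database.
CATALOG = [
    Config("shelf-s", 800, 1800, "storage"),
    Config("shelf-m", 1200, 2000, "storage"),
    Config("shelf-l", 2000, 2200, "storage"),
    Config("desk-m", 1200, 750, "office"),
]
UNIT_TO_MM = {"mm": 1, "cm": 10, "m": 1000}
MAX_PATCH = 0.10  # assumed tolerance: patch dimensions that are at most 10% off


def parse_request(text: str) -> dict:
    """Steps 1-2: extract parameters and normalize lengths to millimetres.
    A regex stands in for the language model so the sketch runs offline."""
    text = text.lower()
    params = {}
    for key in ("width", "height"):
        m = re.search(rf"{key}\s*(\d+(?:\.\d+)?)\s*(mm|cm|m)\b", text)
        if m:
            value = round(float(m.group(1)) * UNIT_TO_MM[m.group(2)])
            if value > 0:
                params[f"{key}_mm"] = value
    for use_case in ("storage", "office"):
        if use_case in text:
            params["use_case"] = use_case
    return params


def distance(cfg: Config, params: dict) -> float:
    """Lower is better: relative dimension error plus a use-case penalty."""
    score = 0.0
    for key in ("width_mm", "height_mm"):
        if key in params:
            score += abs(getattr(cfg, key) - params[key]) / params[key]
    if params.get("use_case") not in (None, cfg.use_case):
        score += 1.0
    return score


def recommend(text: str, top_k: int = 3):
    params = parse_request(text)
    # Steps 3-4: keep the top_k closest existing configurations, best first.
    ranked = sorted(CATALOG, key=lambda c: distance(c, params))[:top_k]
    best = ranked[0]
    # Step 5: patch only dimensions that are within the tolerance.
    for key in ("width_mm", "height_mm"):
        current = getattr(best, key)
        wanted = params.get(key, current)
        if wanted != current and abs(wanted - current) / current <= MAX_PATCH:
            best = replace(best, **{key: wanted})
    return ranked, best
```

Calling `recommend("storage shelf, width 125 cm, height 2 m")` returns the three closest catalog entries plus a patched copy of the best one. Because every candidate comes from the catalog and patches are bounded, the output cannot contain structures that were never in the data.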

What We Learned:

This approach worked flawlessly. It was deterministic, repeatable, and safe.

It never “hallucinated” invalid structures, because it only worked with existing data.

Pros:

- Reliable, explainable results

- Minimal development effort

- No fine-tuning or model training required

Cons:

- No creation of new configurations

- Limited flexibility for unseen product variants

Bottom line: A perfect entry-level AI integration for configurators — low risk, fast deployment, but no real innovation.

Scenario 2 – The Hybrid Model: RAG + Rules + Intelligent Assembly

This scenario combined the strengths of retrieval-augmented generation (RAG) and rule-based validation.

It’s where AI becomes a real co-engineer rather than a mere assistant.

Architecture Overview:

- Conversational intake: The AI interacts with the user to fill missing details (“What’s the ceiling height?”, “Do you need open or closed shelves?”).

- Embedding and RAG search: The processed request is turned into vector embeddings and sent to Pinecone to retrieve matching components.

- Component assembly: A second AI model understands the schema (e.g., JSON layout or 3D object hierarchy) and tries to assemble the configuration logically.

- Rule validation layer: A deterministic validator checks and fixes geometry, collision rules, and XYZ positioning.

- Final AI verification: The last AI agent reviews the configuration and, if needed, asks clarifying questions before output.
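Two of these stages are easy to illustrate without any model calls: the similarity search and the deterministic validator. The sketch below is an assumption-laden stand-in, not the project code. An in-memory cosine search replaces the Pinecone query, components are simplified to axis-aligned boxes, and the repair strategy (shift a colliding box along +x) is one hypothetical rule among many a real validator would need.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Box:
    name: str
    x: float
    y: float
    z: float
    w: float  # extent along x
    d: float  # extent along y
    h: float  # extent along z


def cosine(a, b) -> float:
    dot = sum(p * q for p, q in zip(a, b))
    norm = math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, index: dict, top_k: int = 2) -> list:
    """In-memory stand-in for the vector search: best-matching component names."""
    ranked = sorted(index, key=lambda name: cosine(query_vec, index[name]), reverse=True)
    return ranked[:top_k]


def overlaps(a: Box, b: Box) -> bool:
    """Axis-aligned boxes collide only if they overlap on all three axes."""
    return (a.x < b.x + b.w and b.x < a.x + a.w
            and a.y < b.y + b.d and b.y < a.y + a.d
            and a.z < b.z + b.h and b.z < a.z + a.h)


def validate_and_fix(boxes, room_width: float, ceiling_height: float):
    """Deterministic pass: repair collisions by shifting along +x, reject the rest."""
    placed, log = [], []
    for box in sorted(boxes, key=lambda b: b.x):
        if box.z < 0 or box.z + box.h > ceiling_height:
            raise ValueError(f"{box.name} does not fit between floor and ceiling")
        while True:
            hit = next((o for o in placed if overlaps(box, o)), None)
            if hit is None:
                break
            # Move the box to the right edge of whatever it collides with.
            log.append(f"moved {box.name} from x={box.x} to x={hit.x + hit.w}")
            box = replace(box, x=hit.x + hit.w)
        if box.x < 0 or box.x + box.w > room_width:
            raise ValueError(f"{box.name} does not fit within the room width")
        placed.append(box)
    return placed, log
```

The validator either returns a collision-free layout together with a log of every repair, or raises. Keeping that log is a deliberate choice in this sketch: it gives the final verification step something concrete to review.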

Key Insight:

This hybrid setup successfully generated new, valid configurations, not just recommendations — while still maintaining deterministic control via rule validation.

Pros:

- Generates original configurations

- Still controllable through hard rules

- Flexible and modular (expandable for new domains)

Cons:

- High setup effort (rules per client or product line)

- Hard to scale for very large systems (e.g., entire retail stores)

Bottom line: The sweet spot between creativity and control. Perfect for medium-complexity systems that need both innovation and validation.

Scenario 3 – Full Fine-Tuning: Teaching the AI to Build Configurations from Scratch

The third scenario pushed the limits: we fine-tuned large language models (LLMs) to generate entire configurations autonomously.

The Process:

- Data Preparation: Existing configurations were converted into structured JSONL training data, enriched with semantics and images to capture 3D context.

- Training Environment:

  - Fine-tuning performed on OpenAI’s GPT-4.1 and Fireworks AI (Llama 3, 5 GB)

  - Cost: around €0.50 per training unit

  - Duration: 2 epochs

  - Hardware: 80 GB compute node

- Evaluation & Verification: A self-validation script checked schema integrity, data consistency, and parameter coherence.

- Results:

  - GPT-4.1 fine-tuned model → 100% valid JSON output, fully schema-compliant

  - Llama 3 (5 GB) → 80–90% structural accuracy, acceptable for internal tools

- Technical Limits:

  - Context window: 64k–128k tokens → only configurations up to ~100 KB

  - GDPR: OpenAI-based training not suitable for EU-sensitive data

  - Cost: full-scale fine-tuning can exceed €100,000 for production systems
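To make the data preparation and self-validation steps more concrete, here is a minimal sketch. The schema (`id`, `components`, `type`, `position`, `size`) is hypothetical, and the chat-style `messages` layout is an assumption about what the fine-tuning endpoint accepts, so check your provider's documentation before reusing it. The validator is one plausible reading of "schema integrity and parameter coherence", not the script used in the experiment.

```python
import json


def to_training_line(request: str, config: dict) -> str:
    """One JSONL line: the user request paired with the target configuration."""
    record = {
        "messages": [
            {"role": "user", "content": request},
            {"role": "assistant", "content": json.dumps(config, sort_keys=True)},
        ]
    }
    return json.dumps(record)


def is_vec3(value) -> bool:
    """True for a list of exactly three numbers (booleans excluded)."""
    return (isinstance(value, list) and len(value) == 3
            and all(isinstance(v, (int, float)) and not isinstance(v, bool)
                    for v in value))


def validate_output(raw: str) -> list:
    """Return a list of problems found in one model output; empty means valid."""
    try:
        config = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(config, dict):
        return ["top level must be an object"]
    errors = []
    if not isinstance(config.get("id"), str):
        errors.append("'id' missing or not a string")
    components = config.get("components")
    if not isinstance(components, list):
        errors.append("'components' missing or not a list")
        components = []
    for i, comp in enumerate(components):
        if not isinstance(comp, dict):
            errors.append(f"component {i} is not an object")
            continue
        if not isinstance(comp.get("type"), str):
            errors.append(f"component {i}: 'type' missing or not a string")
        if not is_vec3(comp.get("position")):
            errors.append(f"component {i}: 'position' must be three numbers")
        if not is_vec3(comp.get("size")):
            errors.append(f"component {i}: 'size' must be three numbers")
        elif any(v <= 0 for v in comp["size"]):
            errors.append(f"component {i}: 'size' values must be positive")
    return errors


def valid_rate(outputs: list) -> float:
    """Share of outputs that pass validation, e.g. for comparing two models."""
    if not outputs:
        return 0.0
    return sum(1 for raw in outputs if not validate_output(raw)) / len(outputs)
```

Running `valid_rate` over a held-out set of generated configurations yields the kind of percentage reported in the results above, although the exact checks behind those figures are not described in this article.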

Lessons Learned:

In our tests, the fine-tuned GPT-4.1 model reproduced complex structures reliably: every output was valid, schema-compliant JSON.

Even with small datasets, they quickly reach production-ready quality — ideal for proof-of-concepts.

However, open-source models remain better suited for enterprise deployments due to cost, privacy, and control.

Pros:

- Full automation and creative freedom

- Fast POC results

- High consistency with the right dataset

Cons:

- High cost and maintenance

- Data protection challenges (especially in the EU)

- Retraining required after schema changes

Bottom line: The future of AI configurators — but not cheap or compliance-friendly yet.

Scenario Comparison

| Criteria | Scenario 1: Recommender | Scenario 2: RAG + Rules | Scenario 3: Fine-Tuning |
|---|---|---|---|
| New configuration generation | ❌ | ✅ | ✅ |
| Determinism / reliability | ✅ | ✅ | ⚠️ Partial |
| Implementation cost | 💶 Low | 💶💶 Medium | 💶💶💶 High |
| GDPR / data control | ✅ | ✅ | ⚠️ Conditional |
| Maintenance effort | Low | High | Very high |
| Time to POC | Fast | Moderate | Moderate |
| Scalability | Limited | Medium | High (with budget) |

Practical Takeaways for AI Engineers & Product Leaders

- Modularize configurations. Train the AI on sub-assemblies instead of massive monolithic structures.

- Add a validation layer. Geometry, semantics, and compatibility checks are mandatory, not optional.

- Plan for retraining. Budget 20–30% for re-training whenever your schema or product logic evolves.

- Optimize RAG hygiene. Feed only validated components into your vector database (Pinecone, pgvector, etc.).

- Stay GDPR-compliant. Use local or EU-hosted models (Mistral, Llama 3) for enterprise deployments.

- Start lean. Begin with a simple recommendation prototype (Scenario 1), then scale to Scenario 2 once your data and logic are ready.
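As an illustration of the first takeaway, the sketch below splits one large configuration into per-sub-assembly training records and checks each against a size budget. The `subassembly` field and the record layout are hypothetical, and the 100 KB default simply mirrors the approximate per-configuration limit observed in Scenario 3; treat it as a starting point, not a measured threshold.

```python
import json

MAX_BYTES = 100_000  # assumed budget, based on the ~100 KB limit seen in Scenario 3


def split_by_subassembly(config: dict, max_bytes: int = MAX_BYTES) -> list:
    """Group components by sub-assembly and emit one training record per group."""
    groups = {}
    for comp in config["components"]:
        # Components without a label land in a shared "ungrouped" bucket.
        groups.setdefault(comp.get("subassembly", "ungrouped"), []).append(comp)

    chunks = []
    for name, comps in groups.items():
        chunk = {"parent_id": config["id"], "subassembly": name, "components": comps}
        size = len(json.dumps(chunk).encode("utf-8"))
        if size > max_bytes:
            # Too large for one training example: the group needs a finer split.
            raise ValueError(f"sub-assembly '{name}' is {size} bytes, over the budget")
        chunks.append(chunk)
    return chunks
```

Each returned chunk keeps a `parent_id`, so sub-assemblies can be reassembled and validated as a whole after generation.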

Final Thoughts – Where Configurators Are Headed

AI won’t replace configurators. It will redefine them.

The static “rule trees” of the past decade will evolve into adaptive, self-learning configuration systems — able to understand goals, infer intent, and generate valid 3D setups dynamically.

The technology is already here: RAG and fine-tuned LLMs can interpret engineering logic, manage components, and build end-to-end configurations faster than traditional software ever could.

The real challenge isn’t the AI.

It’s balancing creativity, determinism, and compliance — without losing control of cost or governance.

If your company builds or operates a 3D configurator, CPQ system, or digital twin, and you’re exploring AI-driven configuration or RAG architecture, I can help you design a proof-of-concept, from architecture to deployment.
